Prerequisites

- Ubuntu 18.04.3 Linux Operating System

- Java 8 (JDK 1.8 or later)

Walk-through

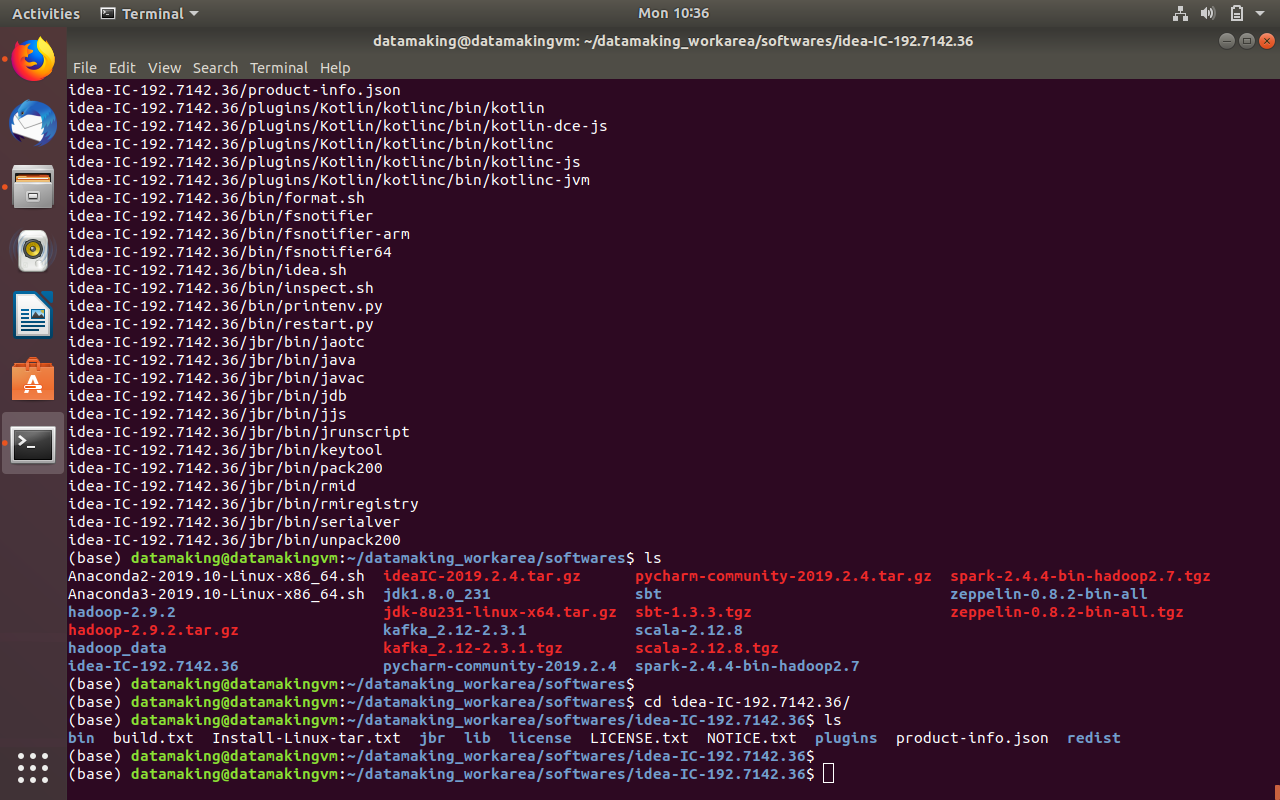

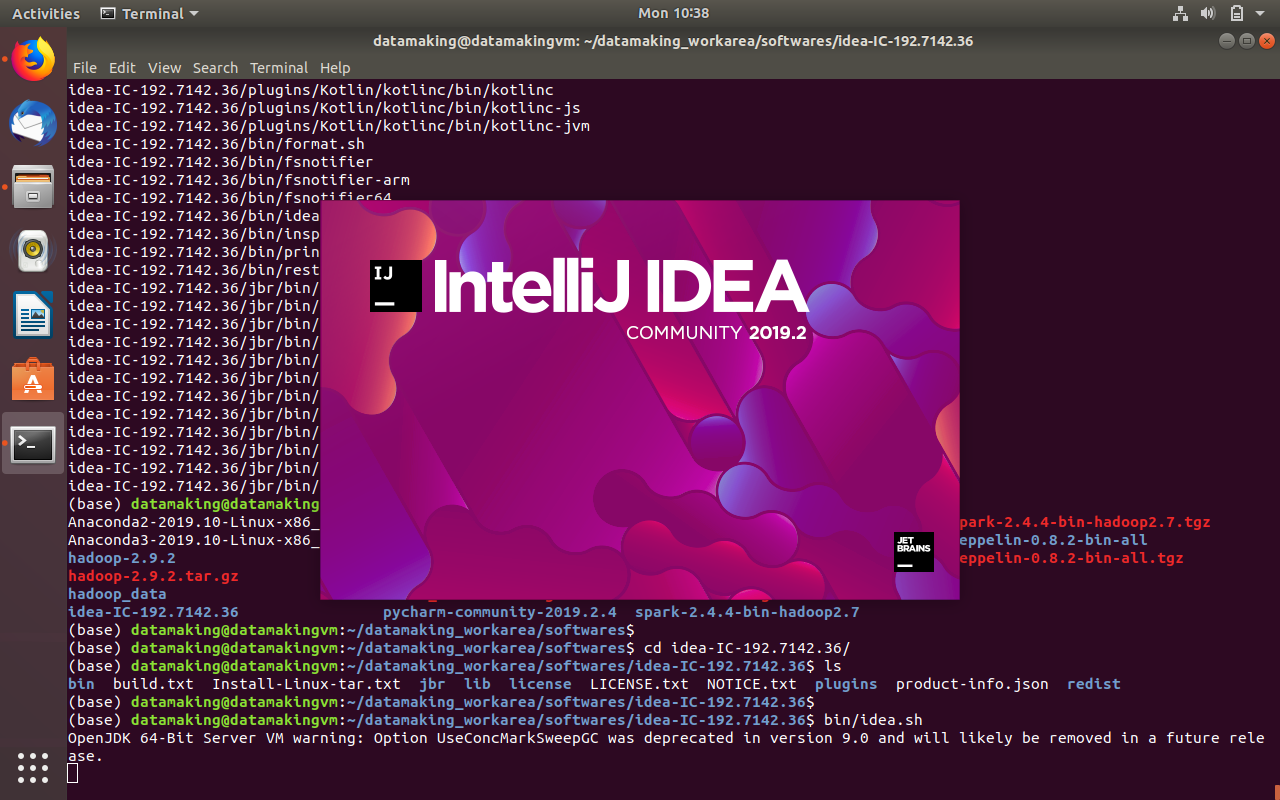

In this article, I walk through the steps to create a first Apache Spark application using IntelliJ IDEA on Ubuntu 18.04.3.

Step 1: Download IntelliJ IDEA Community Edition

Search for "intellij idea download" on www.google.com.

    package com.datamaking.apachespark101

    import org.apache.spark.sql.SparkSession

    object create_first_app_apachespark101_part_1 {
      def main(args: Array[String]): Unit = {
        println("Started ...")
        println("First Apache Spark 2.4.4 Application using IntelliJ IDEA in Ubuntu 18.04.3 | Apache Spark 101 Tutorial | Scala API | Part 2")

        // Create a SparkSession that runs locally on all available cores
        val spark = SparkSession
          .builder
          .appName("Apache Spark 101 Tutorial | Part 1")
          .master("local[*]")
          .getOrCreate()

        // Reduce console noise: log only errors
        spark.sparkContext.setLogLevel("ERROR")

        val tech_names_list = List("spark1", "spark2", "spark3", "hadoop1", "hadoop2", "spark4")

        // Distribute the list as an RDD with 3 partitions
        val names_rdd = spark.sparkContext.parallelize(tech_names_list, 3)

        // Transformation: convert every element to upper case
        val names_upper_case_rdd = names_rdd.map(ele => ele.toUpperCase())

        // Action: bring the results back to the driver and print them
        names_upper_case_rdd.collect().foreach(println)

        spark.stop()
        println("Completed.")
      }
    }
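
The program above needs the Spark SQL library on the classpath, because that is where `SparkSession` lives. The article does not show how the project is built, so the following is only a minimal sketch, assuming the project uses sbt. The project name, version, and the choice of Scala 2.11.12 are assumptions made for illustration; only the Spark version (2.4.4) comes from the article.

```scala
// build.sbt: a hypothetical minimal build definition (not taken from the article)
name := "apachespark101"     // assumed project name
version := "0.1"             // assumed project version
scalaVersion := "2.11.12"    // assumed; Spark 2.4.4 is published for Scala 2.11 and 2.12

// spark-sql provides SparkSession and pulls in spark-core transitively
libraryDependencies += "org.apache.spark" %% "spark-sql" % "2.4.4"
```

If the project is built with Maven instead, the same coordinates (`org.apache.spark`, `spark-sql_2.11`, `2.4.4`) would go into `pom.xml` as a dependency.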

Summary

In this article, we installed IntelliJ IDEA Community Edition on Ubuntu 18.04.3 and ran a first Apache Spark application. Please post any feedback or questions you have. Thank you. Happy learning!
